OpenAI's Deep Research and the Future of Learning and Work

What I learned last night about the future of knowledge production, and what this means for the future of learning and work.

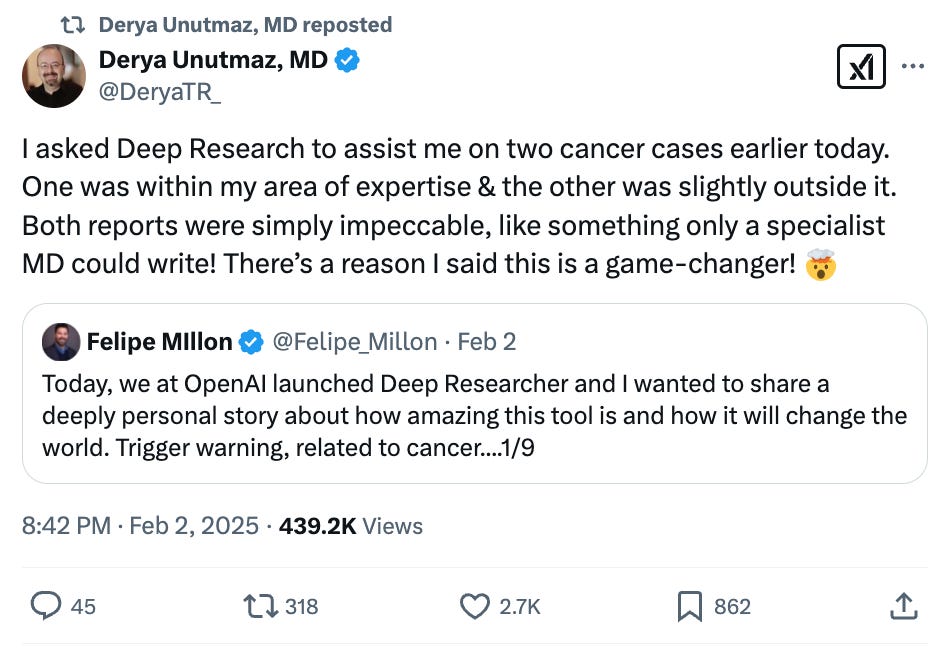

Like other ChatGPT Plus users, I received initial access to OpenAI’s Deep Research yesterday. For the last few weeks I have been watching my Twitter/X feed closely as people shared results, with reactions ranging from shock and awe to cynical dismissal of the ever-evolving “hype machine”.

In my experience, this wide range of responses comes down to three things: (1) the quality of your inquiry, (2) the quality of your source materials, and (3) the depth of your expertise and expectations. When all three are aligned, the results can be…INCREDIBLE.

My Encounter with Deep Research on Generative AI and the Future of Work

This is my area of expertise and the focus of my attention every day. I teach undergraduate and graduate students at numerous institutions, and I advise universities, K-12 districts, workforce development agencies, and industry partners about the impacts of generative AI on the future of learning and work. I also have a book on AI and libraries coming out this summer from the American Library Association (more about this very soon). In other words, I have my finger on the pulse of these questions, their trends and trajectories, their opportunities and challenges, and I have high expectations for what constructive and useful research in this area looks like.

My Testing Methodology

Because I currently have only 10 queries per month, I decided that the most effective way to push the limits of what I could produce was a kind of split test: one report built on a carefully curated set of sources and one that relied entirely on the capabilities of Deep Research.

I used the same prompt for both reports:

Compile a research report on the impact of Generative AI on the future of work. I want the report to focus on the macro level and not be industry specific except in minor, illustrative explanations.

For one report I chose ten relevant, high-quality, and comprehensive sources (the maximum you can upload); for the other I provided no materials at all. (At the end of this piece you will be able to access both reports and a list of the sources I provided.)

Here are the major details for each report:

Curated Sources Report

Deep Research reasoned over the curated collection of source materials for 21 minutes.

Deep Research asked only a few follow-up questions before beginning its work.

It did not cite any sources outside the collection I provided (a surprising development, but one that led to a massive increase in quality and utility).

The report came in at just over 7,200 words.

Novel Search Report

Deep Research surveyed a total of 18 sources and reasoned for 16 minutes before producing its report.

Deep Research asked more, and more detailed, follow-up questions before beginning its work.

It chose all of its sources independently.

The report came in at just under 16,000 words (more than double the length of the curated report).

Both reports have immediate educational and research value.

The novel report introduced me to source materials I had not encountered in my own reading and research, and it helped clarify and document larger trends and trajectories in the work of academics, experts, and organizations around several key themes.

The curated report provided a level of focus and clarity that was genuinely shocking. I sat stunned on the couch, reading late into the night and waiting to find something blatantly wrong or simply unhelpful… and it never happened.

In less than an hour, as part of my $20-a-month subscription, I had more than 20,000 words of useful, actionable, quality research that I could immediately use in my work.

This morning I tried to estimate how long it would take me to produce similar materials on my own. I believe I could produce work superior to the novel report and comparable to the curated report, but the time it would take me to read, organize, create, and publish parallel versions of these materials would land somewhere in the range of 40-50 hours.

This may not sound like much, but a closer analysis of my schedule, bandwidth, and capabilities shows otherwise. Let me try to explain…

Literal Time Spent

45 Minutes: Time for prompting, follow-up questions, providing materials (when applicable), Deep Research reasoning and text production.

15 Minutes: Converting the reports (generated in Markdown) into a document in Bear that could be exported as a PDF for reading and as a DOCX for use in other applications (e.g., creating an audio version in ElevenLabs).

180 Minutes: Close reading with annotations and notes of the PDF documents in Readwise Reader.

TOTAL TIME SPENT IN CREATION, FORMATTING, AND CRITICAL ENGAGEMENT: 4 HOURS
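To make the comparison concrete, here is a small Python sketch of the arithmetic, using only the three figures in the breakdown above and the 40-50 hour estimate for doing the work by hand. The dictionary labels are illustrative, and the printed ratio is only a rough one, since the manual estimate also covers organizing, writing, and publishing.

```python
# Minutes per activity, taken from the "Literal Time Spent" breakdown.
time_spent_minutes = {
    "prompting, follow-up questions, Deep Research generation": 45,
    "converting Markdown into PDF/DOCX with Bear": 15,
    "close reading and annotation in Readwise Reader": 180,
}

total_minutes = sum(time_spent_minutes.values())
total_hours = total_minutes / 60

# Estimated hours to produce comparable materials without Deep Research.
manual_hours_low, manual_hours_high = 40, 50

print(f"Total: {total_minutes} minutes = {total_hours:g} hours")
# Rough ratio only: the two estimates do not cover identical tasks.
print(
    f"Manual estimate is {manual_hours_low / total_hours:g}x "
    f"to {manual_hours_high / total_hours:g}x longer"
)
```

Run as-is, this prints a total of 240 minutes (4 hours) and a ratio of 10x to 12.5x.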

Finding 40-50 Hours in My Schedule

As an academic, researcher, and consultant, this is a particularly busy time of year for me. It is the beginning of conference season (which for me runs from March through June). Looking at my calendar as it stands today, finding 40-50 hours of concentrated work for this project would require an incremental approach (an hour or two here and there), and the work would not be finished until sometime this fall.

In other words, finding 40-50 hours in my schedule realistically means months of effort (and that assumes I add nothing to my calendar between now and the fall!).

HOW LONG WILL IT TAKE ME TO “FIND” 40-50 HOURS OF HIGH-LEVEL WORK ON THIS SPECIFIC PROJECT: 3-6 MONTHS

The implications of this exponential acceleration of learning and knowledge production are hard to wrap my head around. It is another example, in real time, of what Dario Amodei calls “the compressed twenty-first century”. (He is talking about advances in medicine and health, but I think this will be true across nearly all fields.)

The Compounding Implications

Doing a project like this without tools like Deep Research (and a whole host of other incredible GenAI tools I use all the time, like LitMaps, Sana, NotebookLM, Google AI Studio, ExplainPaper, and others) means that I might be able to ship 2-3 projects of this scale per year (and even that feels somewhat aggressive, maybe overly optimistic).

With capabilities like these, it is conceivable that I could complete one or more projects of this kind per month, with gains in quantity, quality, and utility.

This means that even if these capabilities never advanced any further (and they are just getting started!), I would be able to go from 2-3 projects per year to one or more per month.

BEFORE THESE TOOLS: 20-30 PROJECTS IN A DECADE

WITH THESE TOOLS: 120-150 PROJECTS IN A DECADE

WITH INCREASED CAPABILITIES BY THIS TIME NEXT YEAR: ??????????
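The decade figures follow from simple multiplication, and the short Python sketch below reproduces them. One assumption to flag: “one or more per month” only fixes the lower bound at 12 projects per year, so the upper bound of 15 per year is inferred from the 150-project figure rather than stated directly.

```python
YEARS = 10

# 2-3 projects per year without these tools.
before_per_year = (2, 3)
# 12 = one project per month; 15 is an ASSUMED upper bound inferred from
# the 150-projects-per-decade figure.
with_tools_per_year = (12, 15)


def per_decade(rate_range, years=YEARS):
    """Scale a (low, high) yearly project rate to a multi-year total."""
    low, high = rate_range
    return low * years, high * years


print("Before these tools:", per_decade(before_per_year))
print("With these tools:", per_decade(with_tools_per_year))
```

This prints (20, 30) and (120, 150), matching the ranges above.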

A Brief Word About the Future of Knowledge Production with Generative AI

I will have a lot to say about this in the coming weeks, some of which I explore in my forthcoming book (co-authored with Chris Rosser), Generative AI and Libraries: Claiming Our Place in the Center of Our Shared Future, which the American Library Association will publish this summer. But here are three immediate takeaways:

The life expectancy of conversations about “allowing” the use of Generative AI in research, teaching, and learning is very short (and they should end sooner than everyone thinks).

The people best positioned to leverage these kinds of tools and outputs are those who already possess deep wisdom and expertise in their field or fields.

While productivity and efficiency are obvious gains, they should not be the end goal. We should also have real, sustained conversations and reconsiderations of the quality, value, and impact of our knowledge production.

The future of learning and work, especially knowledge work, is already here, and if you know how to leverage it… it is a spectacular moment.

The Actual Deep Research Reports

The Novel Deep Research Report

The Curated Sources Deep Research Report

Below is an audio version of the Curated Sources Deep Research Report, narrated with a cloned version of my voice through ElevenLabs. There are errors and glitches in this narration, which I have chosen to leave in for three reasons: (1) to remind us that these technologies are continuing to advance, (2) to note how exceedingly rare those errors are, and (3) to show that this was created in about fifteen minutes, whereas recording, editing, and producing it myself would undoubtedly have taken the better part of a day.